This article was originally published on 15th May 2025 and was written by a former employee of Netizen eXperience, Tey Swan Ling.

Throughout this series, Swan Ling has tested Gemini Advanced across the end-to-end UX research process, from planning with the CARE framework to streamlining recruitment logistics. Most recently in Phase 3, she explored Gemini’s role in crafting discussion guides and acting as a silent partner during live sessions via Google Meet. While the AI required human guidance to refine question flow, it impressed with its contextual ability to decipher mixed-language nuances (“bahasa campur”) during transcription. Now, the study moves to its most complex challenge yet: analyzing the data to build accurate user personas.

Phase 4: Gemini and the Great Persona Puzzle - My Analysis Adventure

Phase 4 marks the exciting (yet slightly daunting) transition to data analysis. This is where the real puzzle-solving begins: the team deep-dives into participants’ responses, hunting for patterns, spotting key differences, and making those crucial calls on how to cluster users into meaningful personas (and when a group deserves its own spotlight!).

This collaborative sprint typically takes 3-5 working days before the team is ready to present the user personas to inform design and business strategies. Given Gemini's strong support in Phase 3 during the discussion guide development, I was eager to see its role in this critical analytical stage.

Time-Efficiency Gains in Data Analysis

A primary benefit of using Gemini was, yet again, how much time it saved me. The typical 1-2 day process of just reviewing raw data, identifying patterns, and synthesizing findings into potential personas was reduced to half a day. To illustrate this further, let’s look at my step-by-step approach to using Gemini for this analysis.

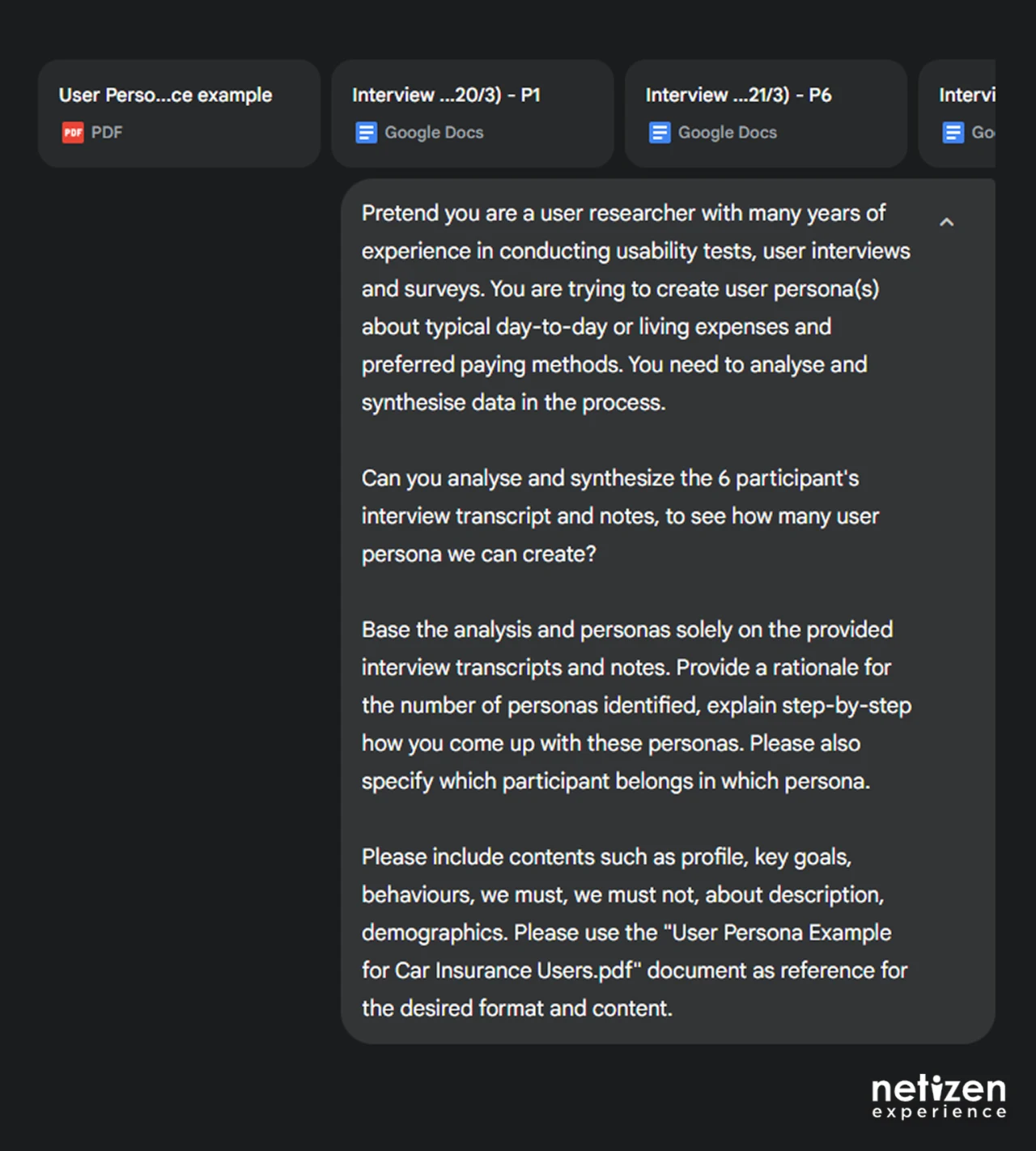

Step 1: Prompt Engineering: Guiding Persona Generation

As you already know, a lot of what makes Gemini work is how one “talks” to it. Yup, the CARE prompt comes into play again. After all, my prompts are the instructions that guide Gemini in its analysis and keep it within the parameters of my user persona development. To start off Phase 4, I first formulated a detailed CARE prompt instructing Gemini to recommend some user personas.
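
For readers who prefer to see the structure of such a prompt spelled out, here is a minimal Python sketch. It assumes CARE stands for Context, Ask, Rules, Examples, which this article does not define, and the `CarePrompt` class and all of its field contents are hypothetical placeholders rather than the prompt used in this study.

```python
from dataclasses import dataclass, field


@dataclass
class CarePrompt:
    """Four parts of a CARE prompt (assumed: Context, Ask, Rules, Examples)."""
    context: str
    ask: str
    rules: list[str] = field(default_factory=list)
    examples: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Join the non-empty parts into one labeled prompt string."""
        sections = [
            ("Context", self.context),
            ("Ask", self.ask),
            ("Rules", "\n".join(f"- {r}" for r in self.rules)),
            ("Examples", "\n".join(f"- {e}" for e in self.examples)),
        ]
        return "\n\n".join(f"{name}:\n{body}" for name, body in sections if body)


# Hypothetical placeholder content, not the prompt used in this study.
prompt = CarePrompt(
    context="I am a UX researcher analyzing interview transcripts about payment habits.",
    ask="Recommend user personas based on the attached transcripts.",
    rules=[
        "Base every claim on the transcripts.",
        "Explain why each participant fits the persona you assign.",
    ],
    examples=["Persona name, payment preference, attitudes, supporting quotes."],
)
print(prompt.render())
```

Keeping the four parts separate makes it easier to tweak one part, such as the rules, without rewriting the whole prompt between iterations.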

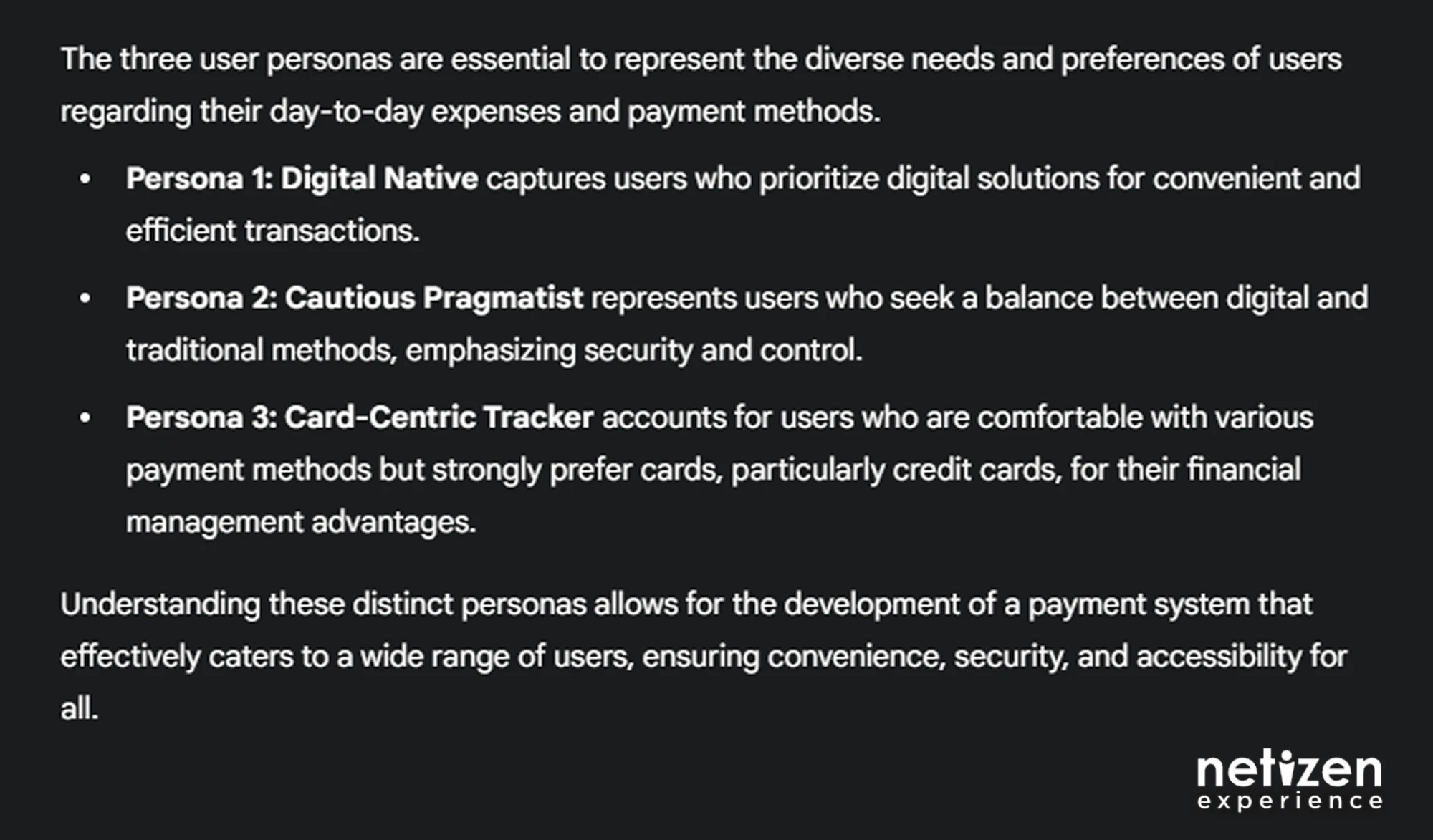

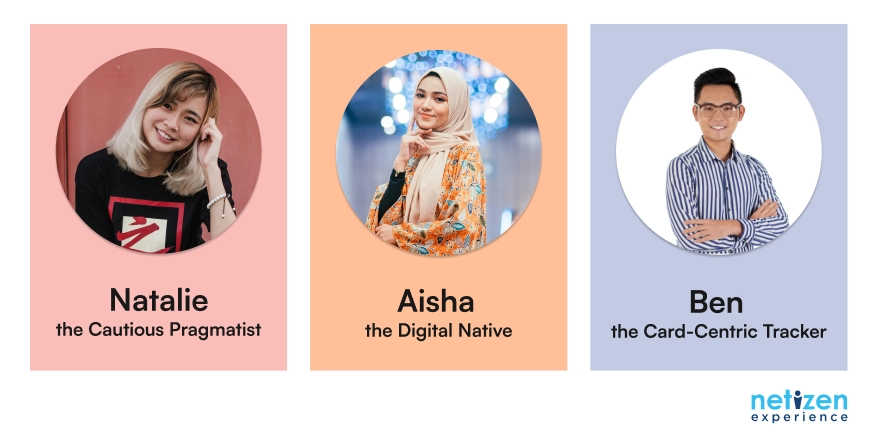

Gemini then suggested three distinct user personas for me:

On a quick read, the initial user personas were insightful: each one felt distinct, like a real person with their own quirks and motivations.

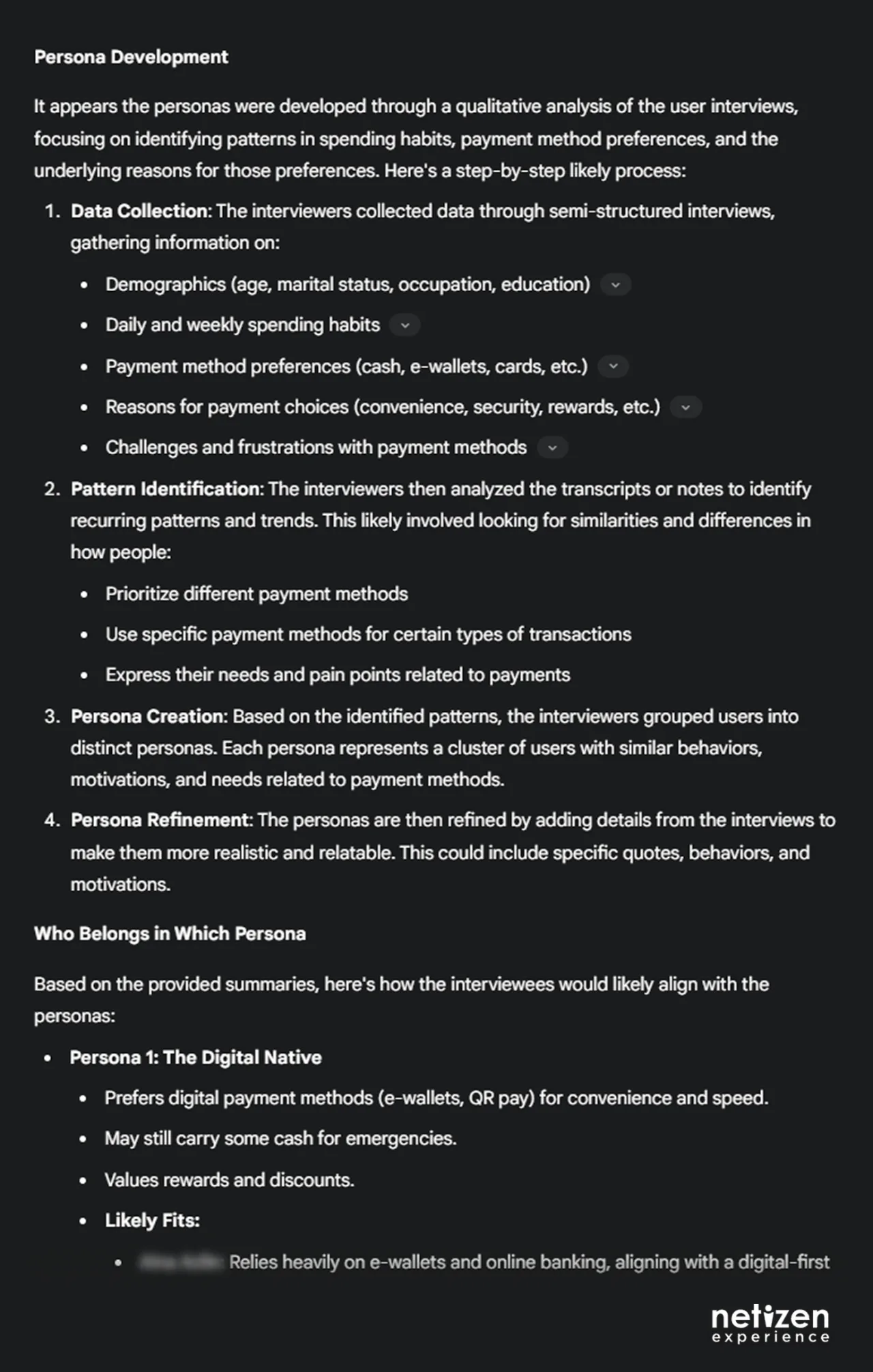

Step 2: Analyzing Gemini’s Reasoning: Deconstructing Persona Generation

Then, to make sure Gemini’s recommended user personas were not just AI “hallucinations” or guesswork, I put on my “skeptical researcher” hat and did my own analysis. I went back to the transcripts to see if its logic held up on things like:

- Why it proposed three user personas

- The steps it took to arrive at that conclusion

- Reasoning for matching my participants to those user personas

I also probed Gemini on any statements I had doubts about or found unclear. Overall, for the most part, the information was accurate, even convincing. Gemini could justify why it categorized each persona the way it did.
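
Part of this spot-check can be made repeatable. The sketch below is one possible approach, not the process used in this study: it flags any quote attributed to a participant that does not appear in that participant's transcript. The function names, participant IDs, and sample text are all hypothetical.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so minor formatting differences don't matter."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def find_unsupported_quotes(
    quotes: dict[str, list[str]], transcripts: dict[str, str]
) -> list[tuple[str, str]]:
    """Return (participant, quote) pairs whose quote is missing from that participant's transcript."""
    missing = []
    for participant, participant_quotes in quotes.items():
        transcript = normalize(transcripts.get(participant, ""))
        for quote in participant_quotes:
            if normalize(quote) not in transcript:
                missing.append((participant, quote))
    return missing


# Hypothetical sample data for illustration only.
transcripts = {"P1": "I mostly pay with my credit card because of the reward points."}
ai_quotes = {"P1": ["pay with my credit card", "I never use e-wallets"]}

print(find_unsupported_quotes(ai_quotes, transcripts))
# [('P1', 'I never use e-wallets')]
```

Exact matching only catches quotes that were invented or altered. Paraphrased claims and the reasoning behind each persona still need a human read of the transcripts.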

Step 3: Resolving Persona Ambiguity with Gemini’s Guidance

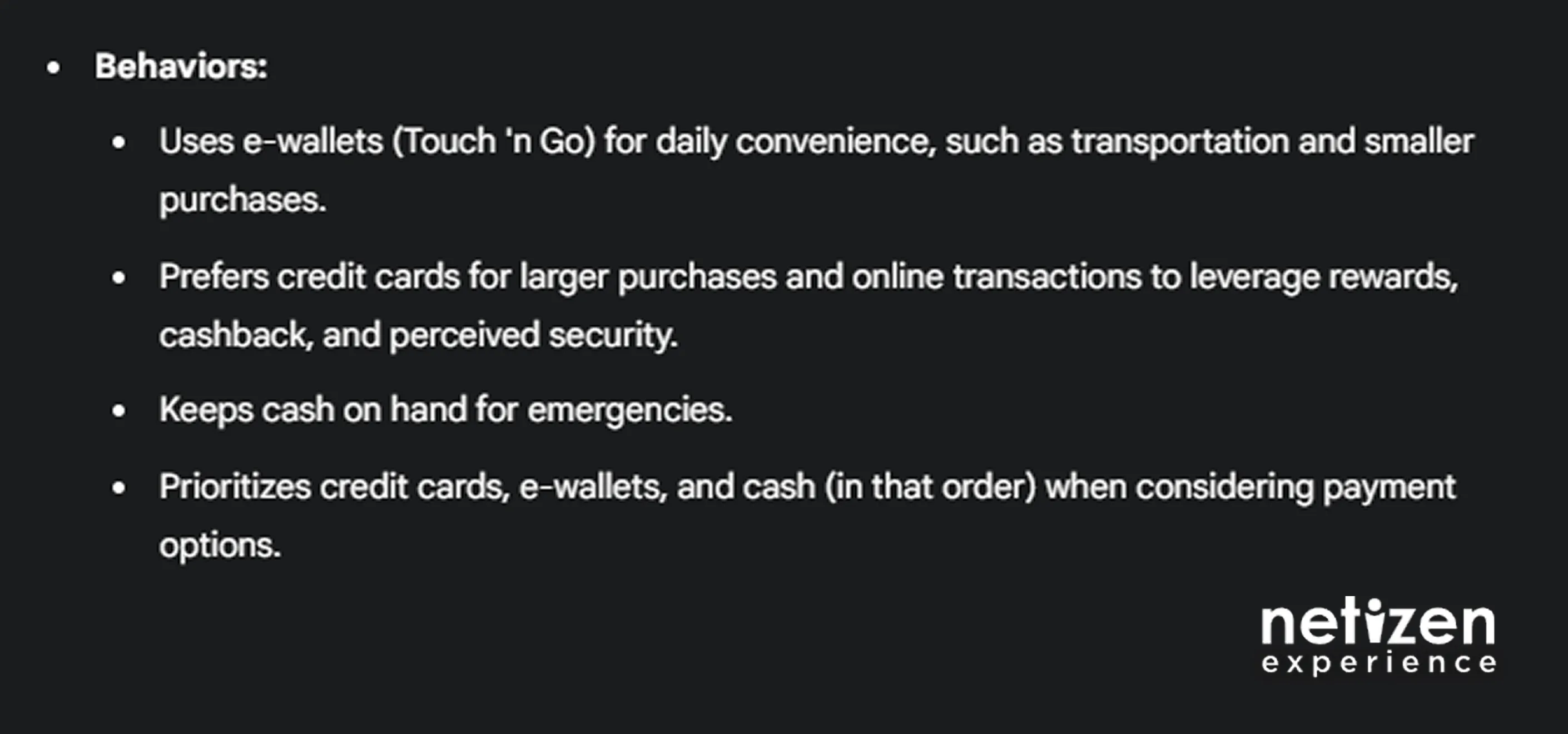

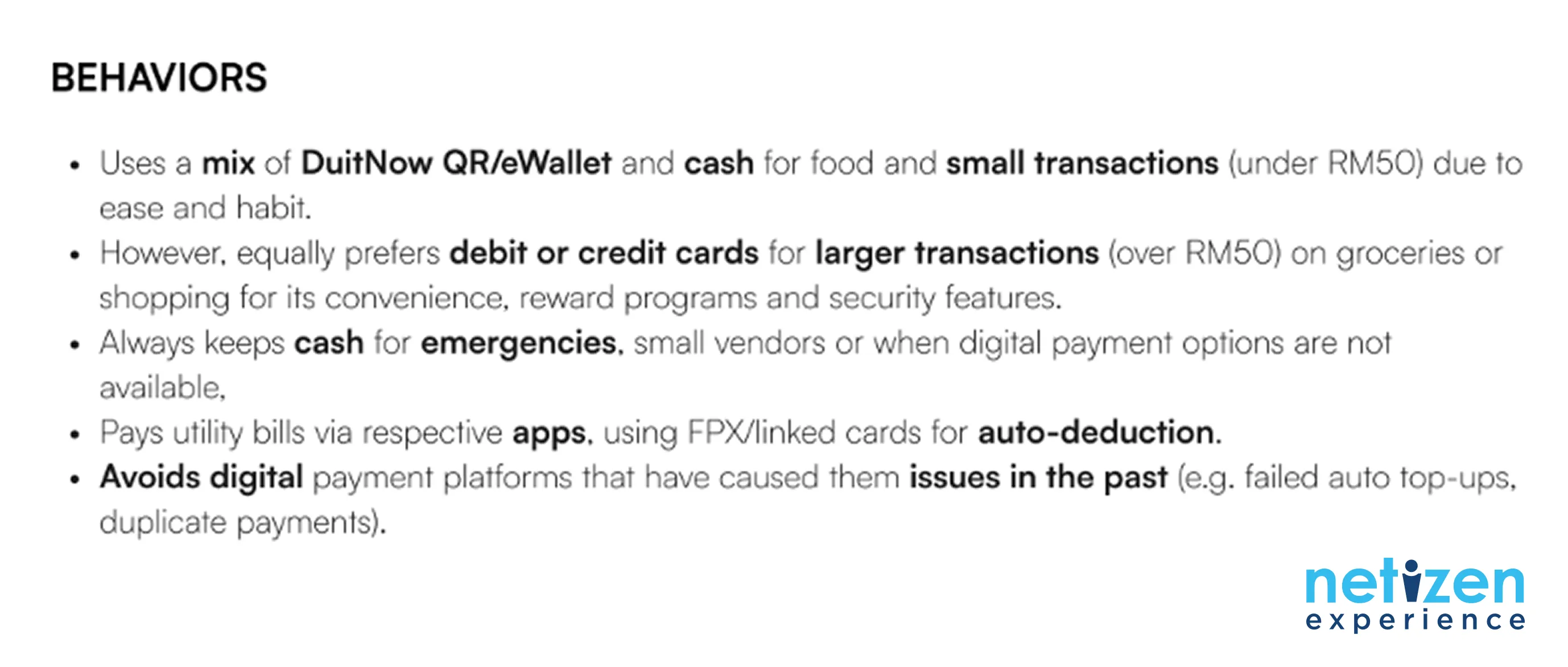

Let’s start with the good things about Gemini: in most cases, it was spot-on. The user personas closely aligned with my own analysis. My final outcome also revealed three distinct user personas, shaped by their preferred payment methods and underlying attitudes.

[Note: The visual elements of these personas were created by yours truly (with stock photos!) and were not generated by Gemini or any other AI]

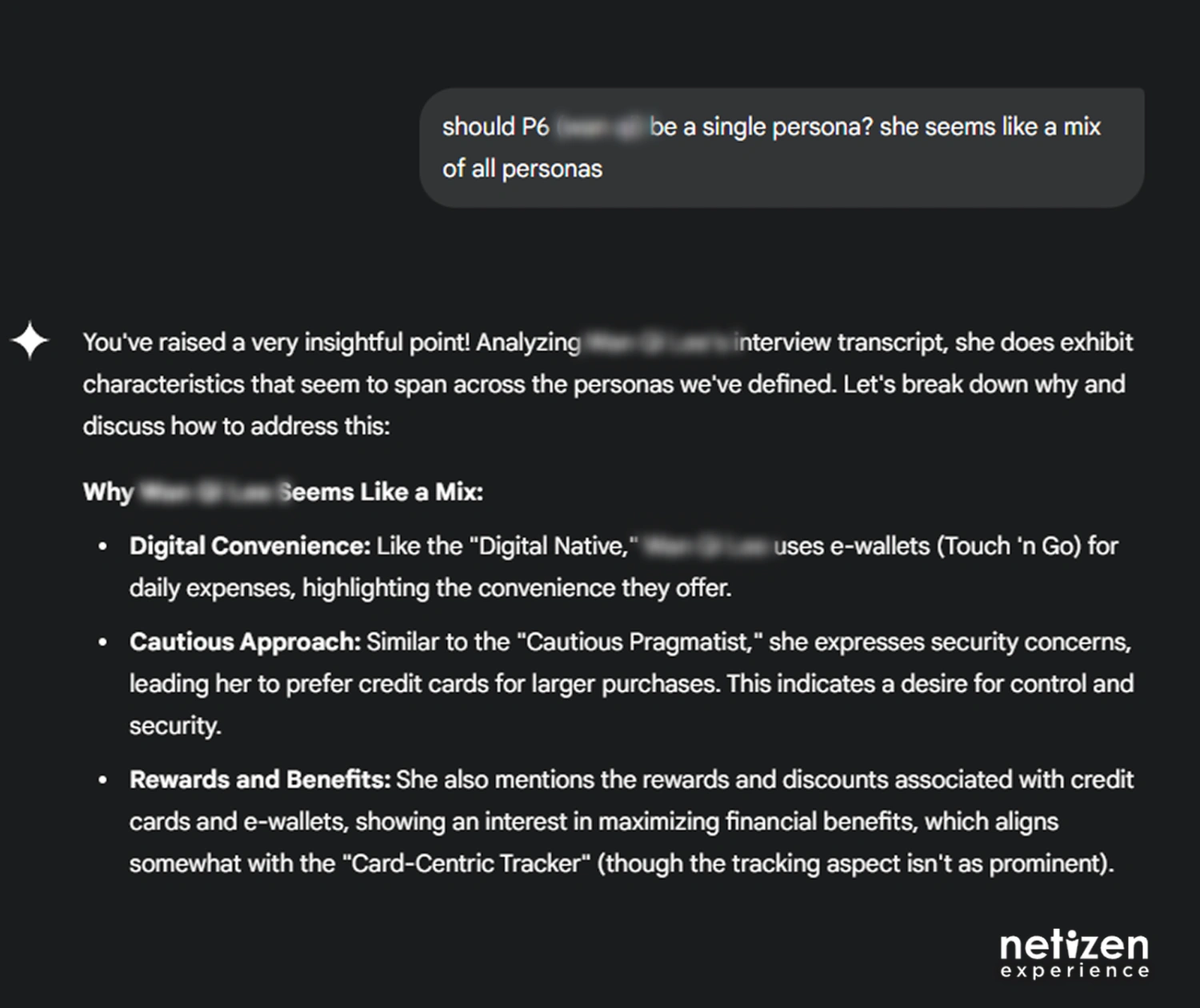

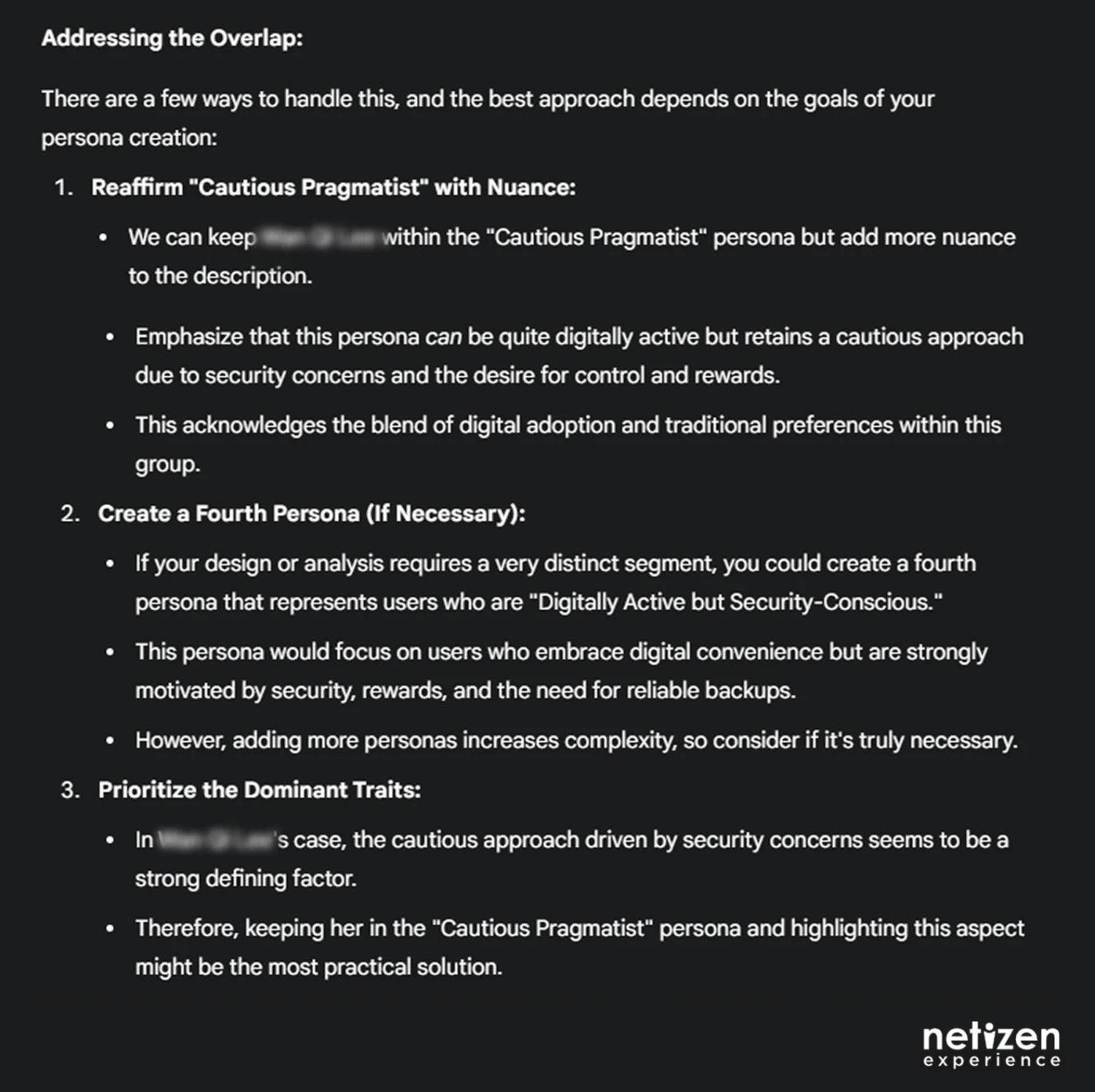

A real turning point in my analysis was realizing Gemini’s potential as a true research partner. During the process, I was stuck categorizing a colleague who used digital payments (like a Digital Native) but was also worried about security for big purchases (like a Cautious Pragmatist).

Unsure how to proceed, and contemplating a fourth persona, I decided to bounce the dilemma off Gemini. It was in that moment that I saw the analytical value I’d been missing, realizing that not every interaction needed to follow a formal prompt framework. Here’s what happened when I directly asked Gemini for its opinion:

It explained why she appears to fit both the Digital Native and Cautious Pragmatist personas, then suggested how to handle the overlap and recommended next steps. With that detailed explanation, I ultimately categorized her as a Cautious Pragmatist, focusing on her underlying attitudes.
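
One way to read that decision is as a tie-break rule: when a participant's behaviors point to one persona and their attitudes to another, attitudes carry more weight. The sketch below illustrates that idea only. The signal names, the `Persona` structure, and the weight of 2.0 are hypothetical assumptions, not how Gemini or the study actually scored anyone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    name: str
    behaviors: frozenset[str]
    attitudes: frozenset[str]


def best_fit(observed_behaviors, observed_attitudes, personas, attitude_weight=2.0):
    """Pick the highest-scoring persona; attitude matches count more than behavior matches."""
    def score(persona):
        behavior_hits = len(persona.behaviors & observed_behaviors)
        attitude_hits = len(persona.attitudes & observed_attitudes)
        return behavior_hits + attitude_weight * attitude_hits

    return max(personas, key=score)


# Hypothetical signals, loosely based on the overlap described above.
digital_native = Persona(
    "Digital Native",
    behaviors=frozenset({"uses_digital_payments"}),
    attitudes=frozenset({"trusts_digital_security"}),
)
cautious_pragmatist = Persona(
    "Cautious Pragmatist",
    behaviors=frozenset({"prefers_familiar_methods_for_big_purchases"}),
    attitudes=frozenset({"worried_about_security_for_big_purchases"}),
)

result = best_fit(
    observed_behaviors={"uses_digital_payments"},
    observed_attitudes={"worried_about_security_for_big_purchases"},
    personas=[digital_native, cautious_pragmatist],
)
print(result.name)  # Cautious Pragmatist
```

With equal weights the two personas would tie, which mirrors the original dilemma. The weight is a judgment call that stays with the researcher.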

This moment crystallized for me how Gemini could serve as a supportive research companion, efficiently summarizing content and helping me see patterns more clearly so I could make confident, informed decisions.

Human Validation: the Importance of Verification

Okay, so here comes the “but”.

Even with my confidence in Gemini growing, we researchers have a responsibility to ensure the accuracy and validity of the results. My deep-dive into Gemini’s “thought” process, and the comparison of its findings with mine, also revealed a limitation: where are the finer nuances?

While Gemini’s analytical strengths were evident, its difficulty with subtle details when trying to be concise showed why human intuition is still important:

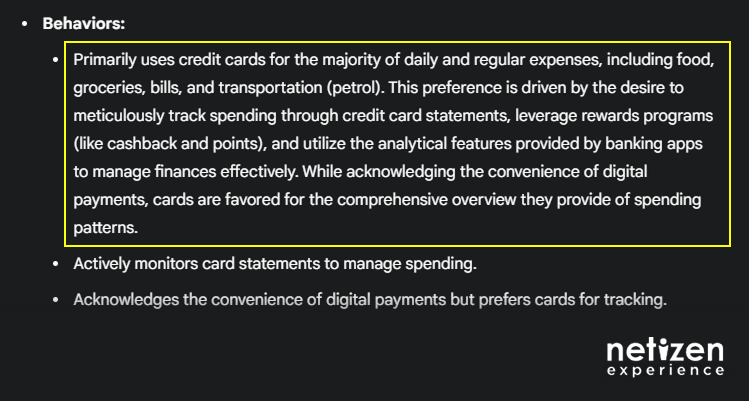

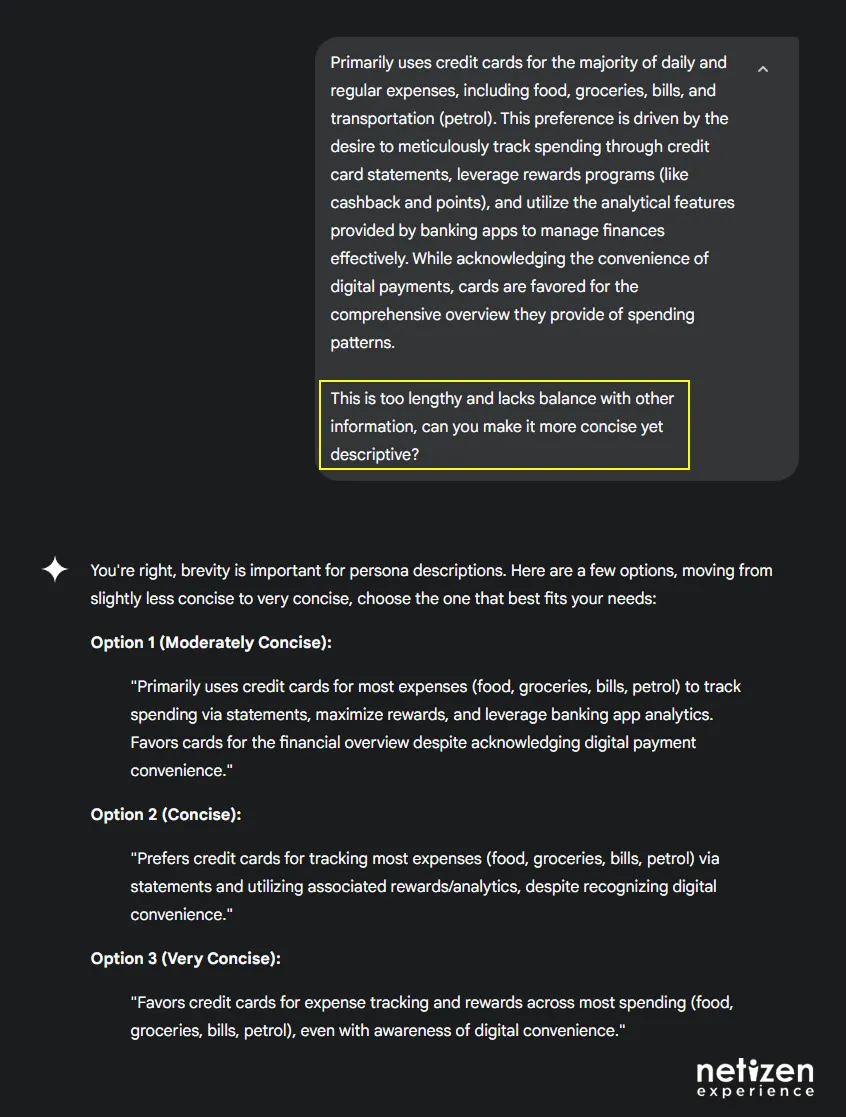

- Lack of rich and vivid details: I needed to ask clarifying questions about transaction specifics or motivations. For example, I wanted to know what the “wide range of transactions” Ben used his credit card for actually included. While I could manually retrieve this missing context or ask Gemini to provide it, doing so for every sentence felt counterproductive and detracted from the initial efficiency gains.

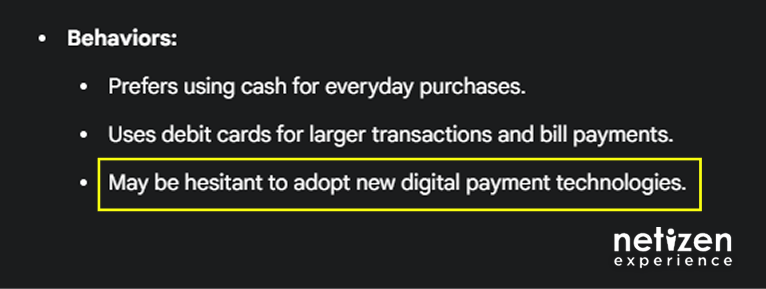

- AI Oversimplification: I also noticed that Gemini’s analysis tends to oversimplify information. It described Ben as hesitant to adopt digital payments, even though my notes showed he was actually quite open to them but had specific reasons for his strong credit card preference. Fortunately, Gemini is more than capable of providing additional analysis and revising its statement to align more closely with Ben’s true behavior.

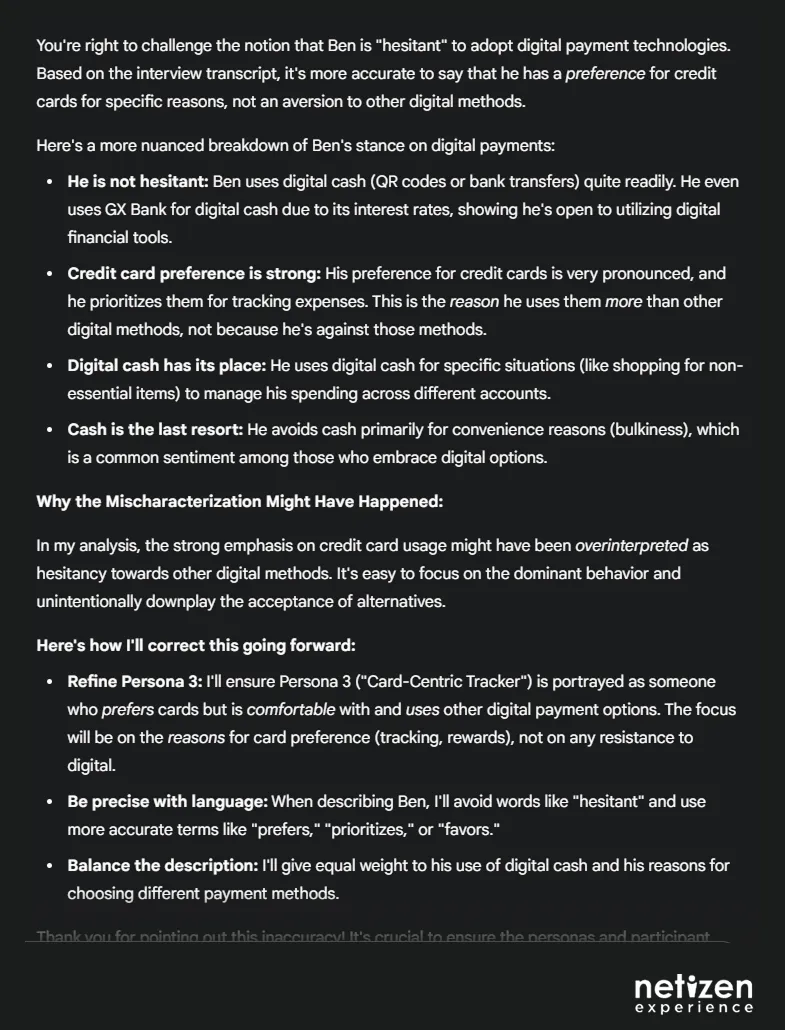

Phase 4 showed that while Gemini can handle complex data, it still risks oversimplifying information, much like human researchers. To address this, here are some considerations that worked for me:

- Let Gemini handle the heavy lifting (data-wise): Leverage Gemini’s speed and efficiency to navigate through large volumes of data and extract key information.

- We decide on the details that matter: As the human researcher, determine the optimum level of detail for impactful findings, guided by context and goals.

- Let’s circle back to the word “collaboration”: Combine Gemini’s power with our understanding to ensure accurate, relevant, and impactful outcomes.

Phase 5: Communicating and Presenting Findings

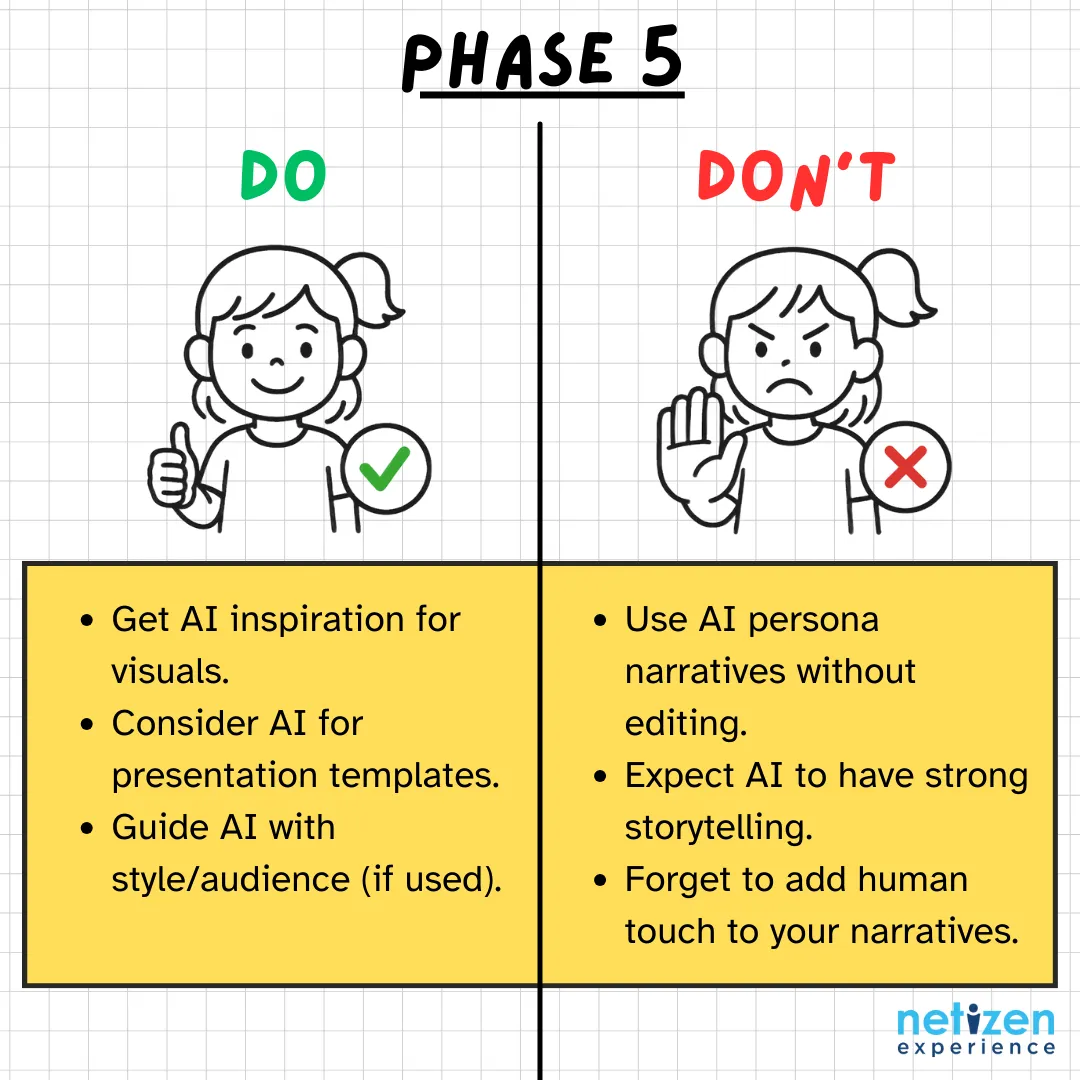

In this final phase, I did not use Gemini to prepare to communicate and present findings to stakeholders because it was an internal research study. I concluded the process by creating the user persona documentation to be presented. At most, I explored and assessed how Gemini would communicate findings by looking at the narrative it crafted for the user persona content.

Recalling the risk of oversimplification and lack of nuance from Phase 4, I found Gemini’s initial output often lacked compelling storytelling. While the content might seem promising at first glance, experienced researchers would likely find the AI-generated personas vague and lacking sufficient narrative depth, raising concerns about directly extracting and using information generated by AI.

However, I do see AI’s potential to help in generating visual aids and creating relevant presentation templates. For this phase, I would suggest using Gemini or other AI tools for creative inspiration on how to best display information, both in text form and visually. Then, when preparing for stakeholder presentations, provide AI with clear style guidelines, reference materials, and audience personas, and refine with your own human touch, as we have discussed in a previous article.

Final Thoughts: My Take on AI for UX Research

Reflecting on my initial questions about AI’s speed, efficiency, and creativity in UX research, this opportunity gave me the answers I needed:

- Speed: Undeniably, AI offers impressive immediate output!

- Efficiency: Subjective – while AI saves time in certain phases, the iterative refinement and clarification process often requires additional effort.

- Creativity: A valuable asset for ideation and exploring diverse perspectives.

Admittedly, my understanding of how best to use AI is still a work in progress, and I’m open to better ways of doing so. But when it comes to its usefulness in UX research? I can confidently say, yes. Even if additional learning is necessary, I can see myself integrating AI into various parts of my UX research workflow.

The big question for me is still about trusting AI with understanding people and their nuances. It’s powerful, no doubt, but that human intuition to empathize still feels uniquely human. So, for now, I’m thinking the best way forward is embracing AI step-by-step and finding a comfortable balance, similar to the process of adopting any new tool or software.

Jumped straight to the insights? See how we built this study from scratch by catching up on Part 1 (Planning), Part 2 (Recruitment), and Part 3 (Fieldwork).